
Stephen Edwards

Dept. of Computer Science

Virginia Tech

660 McBryde Hall (0106)

Blacksburg, VA 24061 USA

edwards@cs.vt.edu

Phone: +1 540 231 5723

Fax: +1 540 231 6075

URL: http://people.cs.vt.edu/~edwards/

We can adapt industrial-quality tools for developing, testing, and grading Resolve/C++ programs, and use them to bring modern software testing practices into the classroom. This paper demonstrates how this can be done by taking a sample Resolve/C++ assignment based on software testing ideas, building a simple Eclipse project that handles build and execution actions for the assignment, writing all of the tests using CxxTest, and processing a solution through Web-CAT, a flexible automated grading system. Using tool support to bring realistic testing practices into the classroom has demonstrable learning benefits, and adapting existing tools for use with Resolve/C++ will allow these same techniques to be used in courses where Resolve/C++ is used.

Keywords

Software testing, CxxTest, Eclipse, JUnit, unit testing framework, test-driven development, test-first coding, IDE, integrated development environment, Web-CAT, automated grading

Software testing is a topic that does not receive full coverage in most undergraduate curricula [Shepard01, Edwards03a]. If we want to teach testing practices more effectively, it may be appropriate to integrate software testing across many--or even most--courses in an undergraduate program [Jones00, Jones01, Edwards03a]. We have had some success with this approach in our core curriculum at Virginia Tech, after integrating software testing throughout our freshman and sophomore courses.

There are a number of potential benefits to learning when software testing is included in the curriculum, since writing software tests requires students to articulate and write down their own understanding of how the software they are writing is intended to behave [Edwards03b]. Further, running tests requires students to experimentally verify (or refute) their understanding of what their code does. Experimental results suggest that student code quality improves as a result. One of our experiments showed an average 28% reduction in bugs per thousand lines of non-commented source code (bugs/KNCSLOC), with the top 20% of students writing their own tests achieving 4 bugs/KNCSLOC or better, comparable to commercial quality in the U.S. Students who did only informal testing on their own never achieved this level of quality; the best of them achieved approximately 32 bugs/KNCSLOC [Edwards03b].

To make software testing practices a regular part of the classroom experience, two things are critical: we must make it easy for students to write and execute tests with minimum overhead, and we must provide concrete and directed feedback on how students can improve their performance. Both goals can be met with appropriate tool support.

First, using an appropriate unit testing framework can simplify test writing and execution. For Java, the JUnit framework [JUnit06] provides excellent support that is easy for students to grasp and use. Similar frameworks, which go by the name XUnit frameworks, exist for other languages as well [XProgramming06]. The problem is that no such unit testing framework exists for Resolve, or Resolve-based languages like Resolve/C++.

Second, automated grading tools can be used to provide clear and concrete feedback to students on performance. Web-CAT is one such automated grading system [Edwards03a, Edwards04]. It supports assignments where students are required to write tests for their own code. For students programming in Java or C++, it also instruments student code and collects test coverage data as student tests are executed. Students receive feedback in the form of a color-highlighted HTML view of their source code that marks portions that have not been executed or that have been undertested. However, no such grading tools exist for Resolve or Resolve-based languages.

We can adapt industrial-quality tools for developing, testing, and grading Resolve/C++ programs, and use them to bring modern software testing practices into the classroom.

More specifically, we can adapt an appropriate unit testing framework so that it works with Resolve/C++. We can also adapt a professional-level IDE that is still suitable for classroom use. Finally, we can adapt a flexible automated grading system to work with Resolve/C++ and provide concrete feedback on correctness and testing.

While no XUnit framework exists for Resolve, why not adapt one from another language? At Virginia Tech, we have had success using CxxTest [CxxTest06] with students learning to program in C++. It is possible to use CxxTest to write unit tests for Resolve/C++ components in order to bring unit testing practices into the classroom. Further, the Eclipse-based IDE support we use for C++ development will also work for Resolve/C++ development, including full GUI support for unit test execution and viewing of results. While Eclipse is a professional IDE, it is seeing increasing use in educational settings as well [Storey03, Reis04].

Together, CxxTest plus Eclipse will provide a modern, high-impact development environment for Resolve/C++ that is easier for students to use. Further, it will ease the transition from Resolve/C++ to other languages and tools. But most importantly, it will allow industry practices regarding unit-level software testing to be included in a Resolve/C++ classroom, along with the learning benefits this approach supports.

Note that the CxxTest framework described here is completely independent of Eclipse. It can also be used via the command line or a makefile without any IDE support if desired. Both command-line and IDE approaches will be demonstrated at the workshop as part of the paper presentation.

Finally, Web-CAT provides a great deal of flexibility for automated grading tasks by providing a plug-in architecture so that instructors can extend its grading capabilities for different assignments. Plug-ins for grading C++ assignments that include student-written CxxTest-style test cases already exist, and provide support for using a commercial code coverage tool called Bullseye Coverage to give students feedback on where they can improve their testing. Web-CAT can be extended to support Resolve/C++ grading by adapting the existing C++/CxxTest plug-in to work with Resolve/C++ too.

Justification for this position comes in the form of a "proof by example". We have taken a sample Resolve/C++ assignment based on software testing ideas, built a simple Eclipse project that handles build and execution actions for the assignment, written all of the tests using CxxTest, and processed a sample solution through Web-CAT using an adapted Resolve/C++ plug-in. This section summarizes the example, shows how CxxTest test cases are written, and illustrates how the Eclipse interface presents test results.

For our example, we chose CSE 221's closed lab 5, a Resolve/C++ assignment used at Ohio State. In this lab, students must write a test suite to demonstrate a number of bugs in a Swap_Substring operation. Students in CSE 221 currently write test driver programs that read commands from stdin and write output to stdout, and allow one to exercise all of the methods under test with user-specified parameters. Students write test cases, or entire suites of test cases, as plain text files that can be fed to such a test driver using I/O redirection on the command line.
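To make the overhead of such a driver concrete, here is a minimal sketch of a command-driven test driver. It is written against std::string rather than the Resolve/C++ Text type. The command name `swap`, its argument order, and the functions `swap_substring` and `run_driver` are hypothetical illustrations, not the CSE 221 lab code. The driver reads commands from an input stream and echoes results to an output stream, so a test input file could be fed to it through redirection.

```cpp
#include <cassert>
#include <iostream>
#include <sstream>
#include <string>

// std::string stand-in for Text::Swap_Substring, with the behavior implied by
// the paper's example: the substring of `self` starting at 0-based `pos` with
// length `len` is replaced by `t2`, and `t2` receives the removed substring.
void swap_substring(std::string& self, int pos, int len, std::string& t2) {
    std::string removed = self.substr(pos, len);
    self.replace(pos, len, t2);
    t2 = removed;
}

// Hypothetical command-driven test driver. Each input line has the form
//   swap <t1> <t2> <pos> <len>
// and the driver prints the resulting values. Everything here except the
// single call to swap_substring is parsing, checking, and formatting code.
void run_driver(std::istream& in, std::ostream& out) {
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream cmd(line);
        std::string op, t1, t2;
        int pos = 0, len = 0;
        if (!(cmd >> op >> t1 >> t2 >> pos >> len) || op != "swap") {
            out << "bad command: " << line << '\n';
            continue;
        }
        // The driver must also guard the operation's preconditions itself.
        if (pos < 0 || len < 0 || pos + len > static_cast<int>(t1.size())) {
            out << "precondition violated: " << line << '\n';
            continue;
        }
        swap_substring(t1, pos, len, t2);
        out << "t1 = " << t1 << ", t2 = " << t2 << '\n';
    }
}
```

The driver prints what happened but says nothing about what should have happened. Whitespace-delimited parsing in this hypothetical format also cannot express an empty text argument, so some test inputs cannot be written at all.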

Unfortunately, test inputs in such a format do not include any corresponding expected output. Instead, textual output from the test driver is typically captured in a separate output file. Regression testing can be performed by comparing output from a new test run against stored output from an earlier test run using tools like diff. However, it is cumbersome for students to write and maintain their own expected output, and without this step, automated checking for correct test results is challenging.
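Regression checking by output comparison comes down to finding where a new run diverges from a stored one. The following is a minimal sketch of that comparison. It assumes both outputs are held in strings, and `first_difference` is a hypothetical helper, not part of any lab tooling.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Compare a new test run's output against stored ("golden") output from an
// earlier run, line by line, in the spirit of running diff on two files.
// Returns 0 if the outputs are identical; otherwise returns the 1-based number
// of the first line that differs (a line missing on either side counts too).
int first_difference(const std::string& expected, const std::string& actual) {
    std::istringstream exp(expected), act(actual);
    std::string e, a;
    int line = 0;
    while (true) {
        bool has_e = static_cast<bool>(std::getline(exp, e));
        bool has_a = static_cast<bool>(std::getline(act, a));
        ++line;
        if (!has_e && !has_a) return 0;          // both exhausted: match
        if (has_e != has_a || e != a) return line;
    }
}
```

The check is only as trustworthy as the stored output. If that output was captured from a buggy run, the comparison faithfully preserves the bug, which is why student-written expected output matters.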

In the closed lab 5 assignment currently being used, students simply write a single test suite (a test input file). As part of the lab setup, students are given eight separate test driver programs, each of which encapsulates a different buggy implementation of the Swap_Substring operation, along with a test driver that implements this operation correctly. Students are also given a helper script that runs a student's test input file against one buggy test driver, runs the same test input against the correct test driver, and then shows the student the diff of the two output files. This is a form of back-to-back testing, where a known correct implementation is used as the test oracle for a (possibly) buggy alternative implementation.
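Back-to-back testing can be sketched directly: run the same inputs through the trusted implementation and the candidate, and flag any disagreement. In the sketch below, std::string stands in for Text, and `reference_swap` plays the role of the correct test driver. `buggy_swap` is a hypothetical defect that never hands the removed text back in t2. None of these names come from the lab materials.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Signature shared by the reference and candidate implementations.
using SwapFn = void (*)(std::string& self, int pos, int len, std::string& t2);

// Known-correct reference implementation, used as the test oracle.
void reference_swap(std::string& self, int pos, int len, std::string& t2) {
    std::string removed = self.substr(pos, len);
    self.replace(pos, len, t2);
    t2 = removed;
}

// Hypothetical buggy candidate: forgets to return the removed text in t2.
void buggy_swap(std::string& self, int pos, int len, std::string& t2) {
    self.replace(pos, len, t2);
}

struct Case { std::string t1, t2; int pos, len; };

// Run every case through both implementations and count the cases where the
// candidate's final (self, t2) pair differs from the oracle's.
int count_disagreements(SwapFn candidate, const std::vector<Case>& cases) {
    int mismatches = 0;
    for (const Case& c : cases) {
        std::string r1 = c.t1, r2 = c.t2;   // copies for the oracle
        std::string x1 = c.t1, x2 = c.t2;   // copies for the candidate
        reference_swap(r1, c.pos, c.len, r2);
        candidate(x1, c.pos, c.len, x2);
        if (r1 != x1 || r2 != x2) ++mismatches;
    }
    return mismatches;
}
```

The sketch makes the key dependency visible: nothing can be checked unless `reference_swap` already exists and is trusted.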

There are several disadvantages to this approach. First, students only write test inputs; they are never forced to articulate their own understanding of what the code should do, but need only write down how it should be invoked. Second, students cannot easily use back-to-back testing on new code that they write, since it requires a reference implementation that is known to be bug-free to compare against. Third, this approach requires constructing a test driver for each unit to be tested. This involves additional input, parsing, and output code that is not directly relevant to the task itself and that may contain bugs of its own. The more sophisticated the component to be tested, the more work must go into the test driver. Also, if one wishes to extend the testing scenario, say by allowing multiple objects to interact, or by adding a new method to the class under test, the test driver code must be extended and kept in sync with the code being developed. Fourth, this approach does not keep all of the test information in one place. The test input is in one text file, the expected output (if the student writes it at all) is in another, and the actual calls to the class under test are in a third location inside the test driver program. Keeping these all in sync becomes more difficult as component complexity increases.

XUnit-style frameworks address these problems by (a) making all test cases directly executable, written in the programming language itself; (b) allowing the expected output or behavior change to be expressed as part of the test case; and (c) eliminating the need for hand-written test drivers by providing a framework that supplies all the features of a completely reusable test driver that can work with any set of test cases, so no input/parsing/output code need be written in order to run tests. To see how this works, examine Figure 1, which shows a single test case enclosed in a CxxTest::TestSuite class. This test case is for the Swap_Substring operation from closed lab 5.

```cpp
#ifndef SWAP_SUBSTRING_TESTS_H_
#define SWAP_SUBSTRING_TESTS_H_

#include <cxxtest/TestSuite.h>
#include "RESOLVE_Foundation.h"
#include "../CI/Text/Text_Swap_Substring_1_Body.h"

class Swap_Substring_Tests : public CxxTest::TestSuite
{
public:

    void testSwapSubstring()
    {
        // Swapping a middle substring of non-empty t1 with all of non-empty t2
        Text_Swap_Substring_1 t1;
        Text_Swap_Substring_1 t2;
        Integer pos = 1;
        Integer len = 3;

        t1 = "hello";
        t2 = "world";

        t1.Swap_Substring( pos, len, t2 );

        TS_ASSERT_EQUALS( t1, "hworldo" );
        TS_ASSERT_EQUALS( t2, "ell" );
        TS_ASSERT_EQUALS( pos, 1 );
        TS_ASSERT_EQUALS( len, 3 );
    }
};

#endif /*SWAP_SUBSTRING_TESTS_H_*/
```

Figure 1. A single CxxTest test case for the Swap_Substring operation, enclosed in a CxxTest::TestSuite class.

In Figure 1, the testSwapSubstring() method is a single test case written as executable code. It creates two Text objects, calls the Swap_Substring method, and makes assertions about the results. In other words, it encapsulates one test case, including the setup, the test actions to be carried out, and the behavior that should be observed if the test "passes". A TestSuite class can contain as many of these test cases as desired, each framed as a separate method (that is, a separate public void method, taking no parameters, and having a name that begins with "test"). A TestSuite class can also contain helper methods that are reused in different test cases. Finally, a TestSuite can even contain common "set up" actions that are performed before each test case in the suite, as well as common "tear down" actions performed after each test case (written as setUp() and tearDown() methods in CxxTest), so that recurring pieces of infrastructure can be factored out when needed.
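The set-up/tear-down lifecycle can be illustrated without CxxTest itself. The sketch below is a hypothetical stand-in: `SuiteBase`, `TextSuite`, and `run_all` are invented for illustration, and std::string replaces Resolve/C++ Text. A runner calls setUp() before and tearDown() after each test method, so every test starts from the same fixture even when an earlier test modified it.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Minimal stand-in for an XUnit suite base class (NOT CxxTest's TestSuite).
class SuiteBase {
public:
    virtual ~SuiteBase() = default;
    virtual void setUp() {}      // runs before each test case
    virtual void tearDown() {}   // runs after each test case
};

// Example suite: setUp() rebuilds the fixture so each test sees fresh values.
class TextSuite : public SuiteBase {
public:
    std::string t1, t2;
    std::vector<std::string> log;   // records call order for inspection

    void setUp() override { t1 = "hello"; t2 = "world"; log.push_back("setUp"); }
    void tearDown() override { log.push_back("tearDown"); }

    // Modifies the fixture; the change must not leak into later tests.
    void testAppend() { log.push_back("testAppend"); t1 += t2; }

    void testFixtureIsFresh() {
        log.push_back("testFixtureIsFresh");
        assert(t1 == "hello");      // holds only because setUp() ran again
    }
};

// Wrap every test method in setUp()/tearDown(), as an XUnit runner does.
void run_all(TextSuite& suite,
             const std::vector<void (TextSuite::*)()>& tests) {
    for (auto test : tests) {
        suite.setUp();
        (suite.*test)();
        suite.tearDown();
    }
}
```

In CxxTest, the generated runner takes over the role of `run_all`. The student writes only the suite class.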

As part of the build process, the CxxTest build support automatically identifies the classes that are subclasses of CxxTest::TestSuite, finds all of the test case methods in each such class, and generates the test driver needed to execute all of the tests the student has written. If there is no main() function in the project, the generated test driver provides one. Otherwise, test execution happens as global objects are initialized, just before the student's main() function is called.
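The "run before main()" behavior relies on an ordinary C++ mechanism: in practice, namespace-scope objects with constructors are initialized before main() begins. The sketch below shows that mechanism in isolation. All names are hypothetical, and this is not the code CxxTest generates.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

namespace startup_demo {

// Plain counters with constant initializers, so they are already zero
// before any constructor-based (dynamic) initialization runs.
int tests_run = 0;
int tests_failed = 0;

bool test_length() { return std::string("hello").size() == 5; }
bool test_concat() { return std::string("h") + "ello" == "hello"; }

// A global object whose constructor runs every test and reports a summary.
struct RunTestsAtStartup {
    RunTestsAtStartup() {
        bool (*tests[])() = {test_length, test_concat};
        for (auto t : tests) {
            ++tests_run;
            if (!t()) ++tests_failed;
        }
        std::printf("Failed %d of %d tests\n", tests_failed, tests_run);
    }
};

// Constructed during static initialization, i.e. before main() starts.
RunTestsAtStartup run_tests_at_startup;

}  // namespace startup_demo
```

By the time main() runs, the tests have already executed and their counts are available.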

Using CxxTest reduces the process of writing test cases to a fairly simple coding exercise, which is something students have already practiced. The CxxTest framework takes care of all of the other details regarding test execution and result reporting. When run from the command line, this single test would produce output like that shown in Figure 2. If a buggy version of Swap_Substring that failed this test case were used instead, the output would be similar to Figure 3. In Figure 3, the value of t1 appears as a list of raw hexadecimal bytes rather than as text, because the default CxxTest machinery does not know how to write Resolve/C++-style values to an output stream. This can be remedied easily.

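```text
Running 1 test .
Failed 0 of 1 tests
Success rate: 100%
```

Figure 2. Command-line output from running the test in Figure 1 against a correct implementation.

```text
Running 1 test
In Swap_Substring_Tests::testSwapSubstring:
../test-cases/Swap_Substring_Tests.h:22: Error: Expected (t1 == "hworldo"), found ({ E4 09 51 00 CE 29 82 00 ... } != hworldo)
Failed 1 of 1 tests
Success rate: 0%
```

Figure 3. Command-line output from the same test when run against a buggy Swap_Substring implementation.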
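The hex bytes in Figure 3 appear to be CxxTest's fallback for values whose type it does not know how to print. CxxTest's user guide describes the remedy: specialize its CxxTest::ValueTraits template for the type, supplying a constructor that takes the value and an asString() method. The sketch below mirrors that pattern so that it compiles without CxxTest or the Resolve/C++ headers. It uses a stand-in ValueTraits in a hypothetical `demo` namespace and a hypothetical `SimpleText` class in place of Text. In a real suite, the specialization would target CxxTest's own template instead.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <string>

// Hypothetical stand-in for a Resolve/C++ Text object.
class SimpleText {
public:
    explicit SimpleText(const std::string& s) : chars_(s) {}
    int Length() const { return static_cast<int>(chars_.size()); }
    char At(int i) const { return chars_[static_cast<std::size_t>(i)]; }
private:
    std::string chars_;
};

namespace demo {

// Generic fallback: dump up to 8 raw bytes of the object in hex, producing
// text shaped like "{ E4 09 51 00 ... }".
template <class T>
class ValueTraits {
public:
    explicit ValueTraits(const T& value) {
        const unsigned char* bytes = reinterpret_cast<const unsigned char*>(&value);
        std::size_t n = sizeof(T) < 8 ? sizeof(T) : 8;
        text_ = "{ ";
        char hex[4];
        for (std::size_t i = 0; i < n; ++i) {
            std::snprintf(hex, sizeof hex, "%02X ", static_cast<unsigned>(bytes[i]));
            text_ += hex;
        }
        text_ += sizeof(T) > n ? "... }" : "}";
    }
    const char* asString() const { return text_.c_str(); }
private:
    std::string text_;
};

// Specialization for the text type: print its characters in quotes instead.
template <>
class ValueTraits<SimpleText> {
public:
    explicit ValueTraits(const SimpleText& t) : text_("\"") {
        for (int i = 0; i < t.Length(); ++i) text_ += t.At(i);
        text_ += '"';
    }
    const char* asString() const { return text_.c_str(); }
private:
    std::string text_;
};

// What a failure reporter would call to format any value via its traits.
template <class T>
std::string describe(const T& value) { return ValueTraits<T>(value).asString(); }

}  // namespace demo
```

With the specialization in place, a failing assertion on a text value would show its characters rather than its memory layout.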

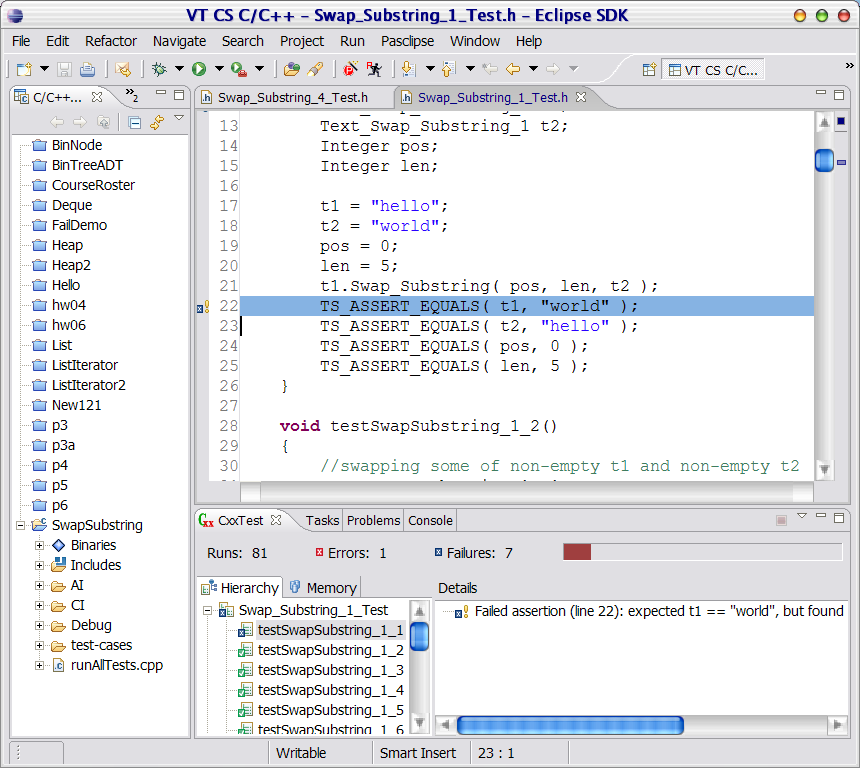

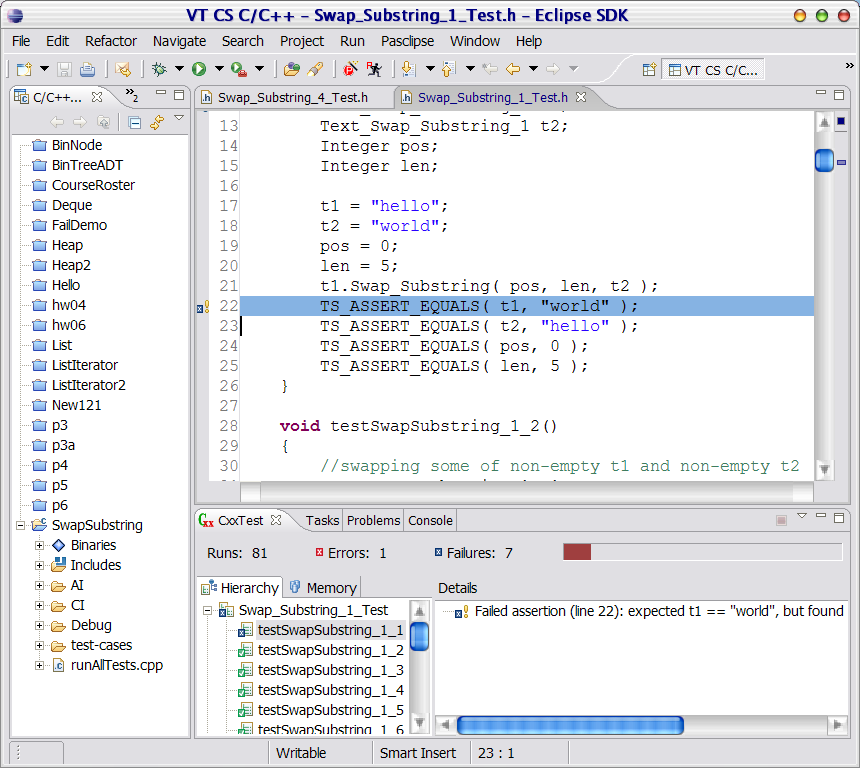

In addition to using CxxTest to write test cases, students could also use an IDE, like Eclipse, to compile and test their code. As part of our Web-CAT SourceForge project, we have a CxxTest plug-in for Eclipse that provides a graphical view of CxxTest results. Figure 4 shows a partial screen shot of the CxxTest view within Eclipse on this example.

Figure 4. A screen shot of the CxxTest graphical view within Eclipse.

Finally, we customized the CxxTest-based grading plug-in for Web-CAT so that it also supports Resolve/C++ assignments, and submitted this example to it. Web-CAT produces a variety of feedback to students, most of which is captured in a unified, color-highlighted HTML "print out" of the student's submission. Figure 5 provides a brief example of what this output looks like for Resolve/C++ code using the modified plug-in. Hovering the mouse over a highlighted line shows why that portion of the code has not been tested adequately. Resolve/C++-specific keywords are also highlighted, thanks to the customized plug-in. More information on Web-CAT is available elsewhere [Edwards03a, Edwards03b, Edwards04].

```cpp
// /*-------------------------------------------------------------------*\
// |   Concrete Instance Body : Text_Swap_Substring_1
// \*-------------------------------------------------------------------*/

#ifndef CI_TEXT_SWAP_SUBSTRING_1_BODY
#define CI_TEXT_SWAP_SUBSTRING_1_BODY 1

///------------------------------------------------------------------------
/// Global Context --------------------------------------------------------
///------------------------------------------------------------------------

#include "Text_Swap_Substring_1.h"
/*!#include "CI/Text/Text_Swap_Substring_1.h"!*/

///------------------------------------------------------------------------
/// Public Operations -----------------------------------------------------
///------------------------------------------------------------------------

procedure_body Text_Swap_Substring_1 ::
Swap_Substring (
        preserves Integer pos,
        preserves Integer len,
        alters Text_Swap_Substring_1& t2
    )
{
    object Integer index = pos + len - 1;
    object Text_Swap_Substring_1 tmp;

    // Fails when swapping all of non-empty t1 and non-empty t2
    if ((self.Length () > 0) and
        (t2.Length () > 0) and
        (self.Length () == len))
    {
        // should be while (index >= pos)
        while (index > pos)
        {
            object Character c;

            self.Remove (index, c);
            tmp.Add (0, c);
            index--;
        }

        index = t2.Length () - 1;
        while (index >= 0)
        {
            object Character c;

            t2.Remove (index, c);
            self.Add (pos, c);
            index--;
        }

        t2 &= tmp;
    }
    else
    {
        while (index >= pos)
        {
            object Character c;

            self.Remove (index, c);
            tmp.Add (0, c);
            index--;
        }

        index = t2.Length () - 1;
        while (index >= 0)
        {
            object Character c;

            t2.Remove (index, c);
            self.Add (pos, c);
            index--;
        }

        t2 &= tmp;
    }
}

void Text_Swap_Substring_1::operator =(const Text& rhs)
{
    Text::operator=(rhs);
}

void Text_Swap_Substring_1::operator=(const Text_Swap_Substring_1& rhs)
{
    Text::operator=(rhs);
}

#endif // CI_TEXT_SWAP_SUBSTRING_1_BODY
```
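A targeted test is needed for the defect seeded in the listing above. To show why, the sketch below ports the listing's loop structure to std::string. `swap_substring_port` and its `seeded_bug` flag are hypothetical, and 0-based positions are assumed as in Figure 1. With the flag set, the first loop uses `index > pos` in the "swap everything" branch, exactly as the listing's comment describes.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical std::string port of the Resolve/C++ loops above.
void swap_substring_port(std::string& self, int pos, int len,
                         std::string& t2, bool seeded_bug) {
    // Same trigger condition as the listing's buggy branch.
    const bool whole_swap = !self.empty() && !t2.empty() &&
                            static_cast<int>(self.size()) == len;
    const bool stop_early = seeded_bug && whole_swap;
    std::string tmp;
    // Remove self[pos .. pos+len-1] from the back, prepending each character
    // to tmp so tmp ends up holding the removed substring in order.
    // With stop_early, the character at index pos is never removed.
    for (int index = pos + len - 1;
         stop_early ? index > pos : index >= pos; --index) {
        tmp.insert(tmp.begin(), self[static_cast<std::size_t>(index)]);
        self.erase(static_cast<std::size_t>(index), 1);
    }
    // Move t2's characters into self at pos, last one first, which keeps
    // their original order.
    for (int index = static_cast<int>(t2.size()) - 1; index >= 0; --index) {
        self.insert(self.begin() + pos, t2[static_cast<std::size_t>(index)]);
        t2.erase(static_cast<std::size_t>(index), 1);
    }
    t2.swap(tmp);  // plays the role of Resolve/C++'s t2 &= tmp
}
```

Under these assumptions, the partial swap used in Figure 1 (pos = 1, len = 3 on "hello") takes the correct branch and passes even with the seeded bug. Exposing this particular defect requires a test that swaps all of a non-empty text, such as pos = 0, len = 5. In that case the buggy branch leaves "worldh" and "ello" instead of "world" and "hello".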

A number of other educators have advocated including software testing across the curriculum [Shepard01, Jones00, Jones01]. An overview of related work appears elsewhere [Edwards03a, Edwards03b]. The Eclipse and CxxTest support described here have been reported in the more general context of supporting Java and C++ development as well [Allowatt05].

XUnit-style testing frameworks provide many benefits for students. They make it easier to write and execute operational tests on individual classes and methods. Once written, XUnit-style tests run without manual intervention, so they fully automate regression testing: students can re-run all of their tests each time they add new code or modify a feature. When students are encouraged to write their tests as they go ("write a little test, write a little code"), the tests give students greater confidence that the code they have written so far works as intended. The tests also give students a better feel for how much they have completed vs. how much remains to be done, and greater confidence when they repair or modify code that is already working. Finally, writing tests this way significantly reduces or prevents big bang integration problems, since each method has been tested in isolation before classes are assembled into larger structures. In perception surveys, students report that they see these benefits themselves, and prefer to use such techniques even when they are not required in class (once they have been exposed to them) [Edwards03b].

CxxTest provides a useful vehicle for obtaining these benefits in class when students are programming in C++. The same tools can also be used on Resolve/C++ code with no real modification. Further, tool support such as Eclipse's CDT and Virginia Tech's CxxTest support for students can also be used on Resolve/C++ programs without modification. While this leaves some cosmetic issues unaddressed (such as how Resolve/C++ values are printed in failure messages), it points in a promising direction: away from simple text-based test driver programs as a way to teach students about software testing, and toward introducing software testing practices into more and more Resolve/C++ class activities.