Publications

Publications in reverse chronological order. CVPR, ECCV, and ICCV are the top conferences in computer vision. ACL, EMNLP, and NAACL are the top conferences in natural language processing. NeurIPS, ICLR, and ICML are top-tier conferences in machine learning generally.

2026

-

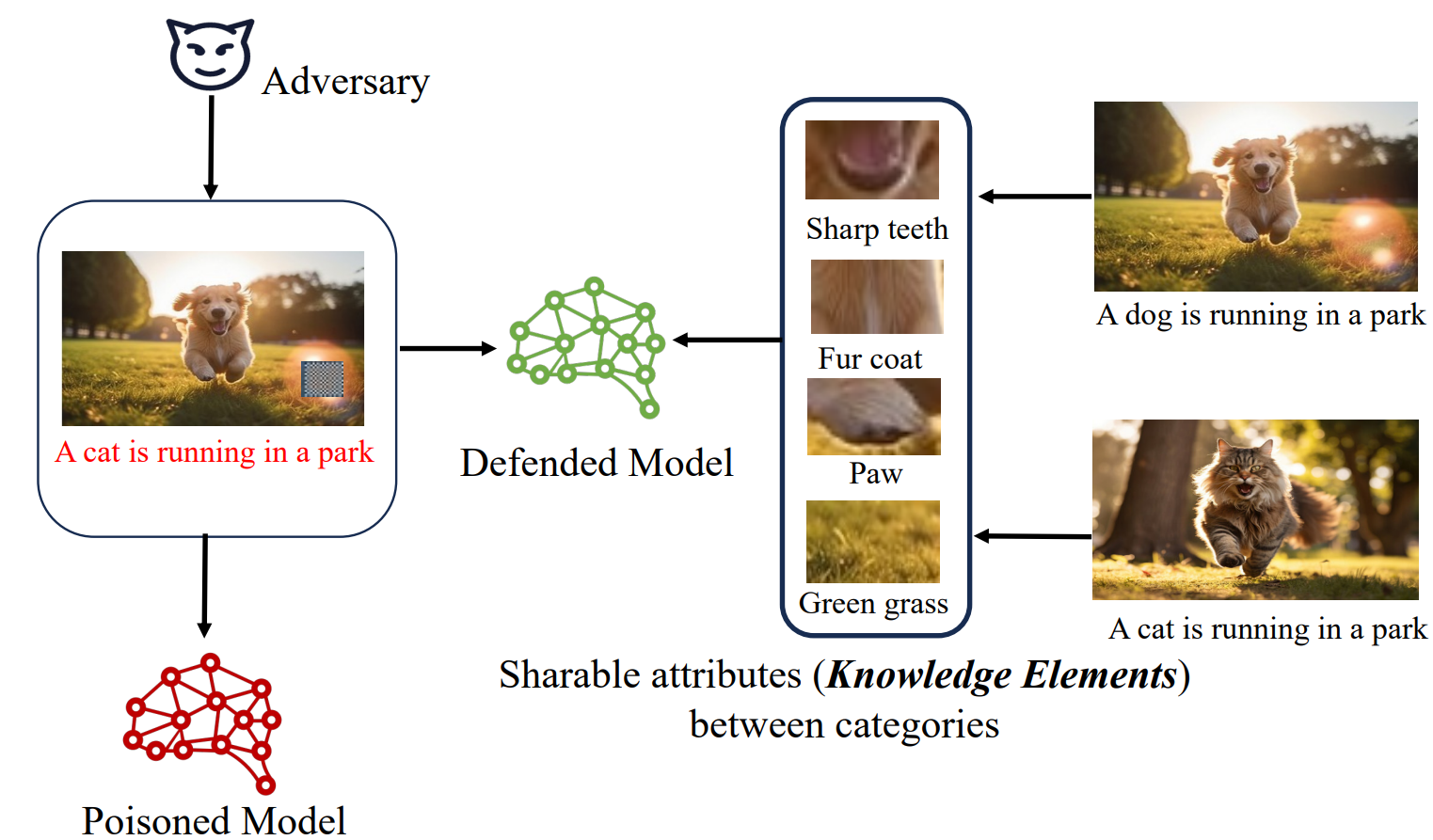

LAMP: Learning Universal Adversarial Perturbations for Multi-Image Tasks via Pre-trained ModelsIn Proceedings of the AAAI Conference on Artificial Intelligence (AAAI-26), 2026

2025

- [NeurIPSW] ENTER: Event Based Interpretable Reasoning for VideoQA. In Proceedings of the Advances in Neural Information Processing Systems, Multimodal Algorithmic Reasoning Workshop, 2025. Spotlight

- [WACV] Advancing chart question answering with robust chart component recognition. In 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2025