The interactive demo results are available at http://sangatgracenote.github.io/lyric.html.

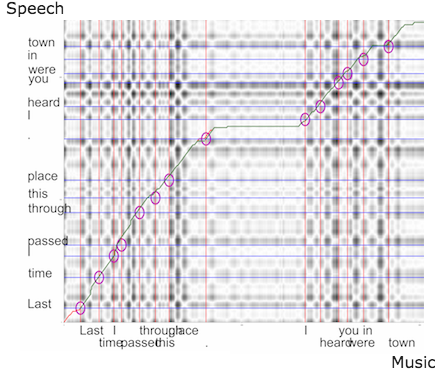

The massive amount of digital music data available necessitates automated methods for processing, classifying, and organizing large volumes of songs. As music discovery and interactive music applications become commonplace, the ability to synchronize lyric text with an audio recording has gained interest. This project presents an approach to lyric-audio alignment that compares synthesized speech with a vocal track extracted from the instrumental mixture using source separation. We take a hierarchical approach: assuming a set of paragraph-music segment pairs is given, we focus on within-segment lyric alignment at the word level. A synthesized speech signal is generated to reflect the properties of the music signal by controlling the speech rate and the gender of the synthesized voice. Dynamic time warping (DTW) finds the minimum-cost alignment path between the synthesized speech and the separated vocal, and this path is used to calculate the timestamps of words in the original signal. The system achieves an average misalignment error of approximately half a second.
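The last two steps above (DTW between the synthesized speech and the separated vocal, then carrying word timestamps across the path) can be sketched as follows. This is a minimal illustration, not the method from the paper: it assumes both signals have already been turned into frame-wise feature vectors (for example MFCCs) with the same hop size, that the speech synthesizer reports the onset time of each word, and it uses plain Euclidean frame distance with the standard (1, 0), (0, 1), (1, 1) step set. The function names `dtw_path` and `map_word_times` and the onset-mapping rule are hypothetical.

```python
import numpy as np


def dtw_path(x, y):
    """Classic DTW between feature sequences x (n frames) and y (m frames).

    Uses Euclidean frame distance and the step set (1, 0), (0, 1), (1, 1).
    Returns the minimum-cost warping path as a list of (i, j) frame pairs,
    where i indexes x (synthesized speech) and j indexes y (separated vocal).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n, m = len(x), len(y)
    # Pairwise Euclidean distance between every frame of x and every frame of y.
    dist = np.linalg.norm(x[:, None, :] - y[None, :, :], axis=-1)
    # Accumulated cost with an infinite border so the path must start at (0, 0).
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i, j] = dist[i - 1, j - 1] + min(
                acc[i - 1, j - 1], acc[i - 1, j], acc[i, j - 1]
            )
    # Backtrack from the final cell, always moving to the cheapest predecessor
    # (ties prefer the diagonal step because it is listed first).
    i, j = n, m
    path = [(i - 1, j - 1)]
    while (i, j) != (1, 1):
        candidates = [(i - 1, j - 1), (i - 1, j), (i, j - 1)]
        i, j = min(candidates, key=lambda c: acc[c])
        path.append((i - 1, j - 1))
    path.reverse()
    return path


def map_word_times(word_onsets, path, hop_seconds):
    """Map word onsets (seconds) in the synthesized speech onto the vocal.

    Hypothetical rule: each onset is converted to a synthesized-speech frame,
    and the first path entry at or after that frame gives the vocal frame.
    """
    mapped = []
    for onset in word_onsets:
        frame = int(round(onset / hop_seconds))
        # First aligned vocal frame for this synthesized frame (else the last one).
        j = next((j for i, j in path if i >= frame), path[-1][1])
        mapped.append(j * hop_seconds)
    return mapped


if __name__ == "__main__":
    # Toy 1-D features: the "vocal" is the "speech" stretched to twice its length.
    speech = [[0.0], [1.0], [2.0], [3.0]]
    vocal = [[0.0], [0.0], [1.0], [1.0], [2.0], [2.0], [3.0], [3.0]]
    path = dtw_path(speech, vocal)
    # Hypothetical word onsets (seconds) from the synthesizer, with a 0.5 s hop.
    print(map_word_times([0.0, 0.5, 1.5], path, hop_seconds=0.5))  # → [0.0, 1.0, 3.0]
```

Because DTW can map one synthesized frame to several vocal frames, taking the first aligned vocal frame is only one reasonable choice; using the middle or last frame of that run would be equally plausible. The O(nm) Python loop is fine for short segments, which fits the hierarchical setup where alignment runs within each paragraph-music segment pair.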

Papers

- Word level lyrics-audio synchronization using separated vocals. (paper) Lee, S. W., Scott, J. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, 2017.