Specifying and Verifying Collaborative Behavior in Component-Based Systems

Abstract

In a parameterized collaboration design, one views software as a collection

of components that play specific roles in interacting, giving rise to collaborative

behavior. From this perspective, collaboration designs revolve around reusing

collaborations that typify certain design patterns. Unfortunately, verifying

that active, concurrently executing components obey the synchronization

and communication requirements needed for the collaboration to work is

a serious problem. At least two major complications arise in concurrent

settings: (1) it may not be possible to analytically identify components

that violate the synchronization constraints required by a collaboration,

and (2) evolving participants in a collaboration independently often gives

rise to unanticipated synchronization conflicts. This paper briefly overviews

and summarizes a solution technique that addresses both of these problems.

Local (that is, role-to-role) synchronization consistency conditions are

formalized and associated decidable inference mechanisms are developed

to determine mutual compatibility and safe refinement of synchronization

behavior. It has been argued that this form of local consistency is necessary,

but insufficient to guarantee a consistent collaboration overall.

As a result, a new notion of global consistency (that is, among multiple

components playing multiple roles) is briefly outlined: causal process

constraint analysis. Principally, the method allows one to: (1) represent

the intended causal processes in terms of interactions depicted in UML

collaboration graphs; (2) formulate constraints on such interactions and

their evolution; and (3) check that the causal process constraints are

satisfied by the observed behavior of the component(s) at run-time.

Keywords

Verification, testing, reuse, collaborative behavior, synchronization,

parameterized collaboration.

Paper Category: technical paper

Emphasis: research

1. Introduction

Although a considerable amount of research has been devoted to component

and composition-based concepts and techniques, the impact of this technology

on component certification has yet to be fully explored. The clients of

reusable components will expect the parts they purchase and use to work

correctly with respect to their catalog descriptions in a given new context.

Therefore, a concrete component must be trustworthy; that is, it must correctly

implement the abstract service specification on which its clients will

depend. Since a reusable component is developed with specific contextual

assumptions and is unlikely to comply completely and without conflicts

with the domain of interest, the challenge of certification with reusable

components is far greater than that of conventional software. For tractability

purposes of assuring correctness of applications developed from reusable

components, local certifiability principles are advocated to facilitate

establishment of component properties outside of the context in which they

are embedded [Weide & Hollingsworth, 1992].

1.1 Local Certifiability Notion in Component-Based Systems

RESOLVE discipline provides a framework and language that facilitates achievement

of modular verification of correctness of functionality. The discipline

enables local or component-wise certification to demonstrate that a concrete

component (i.e., realization) achieves the functional behavior of its abstract

specification (i.e., concept). The certification is modular and local since

no assumptions or references are made with respect to the behavioral features

of clients. The composition of a component is argued to work correctly

as long as the clients interact with the reused component in conformance

with its expectations in a sequential context.

However, the verification as well as reuse of concurrent components

faces challenges not seen, for example, in most UI toolkits and data structure

libraries [Lea 1999]. Factors such as specification of design policies

(i.e., synchronous or asynchronous, local or remote, callback, forwarding,

or delegation), reflective, multilevel design (composite components), security,

safety, liveness, fault-tolerance, recovery policies make specification,

certification, and reasoning of reusable components difficult. Furthermore,

components cannot be built in isolation; instead, they must observe compatibility

constraints that are often phrased as architectural rules [Lea, 1999; Dellarocas,

1997] and synchronization constraints, especially in concurrent settings.

Development with reuse requires determining if the certified properties

and constraints of a component would facilitate its playing certain roles

to establish a specific purpose in the context into which it is embedded.

This paper briefly summarizes an approach, called collaborative behavior

verification, that aims to facilitate the examination of interaction dynamics

(synchronization constraints) of components in concurrent settings to assure

that they can fit and play the roles required by the parameterized collaborations

into which they are embedded.

1.2 The Terminology in Collaborative Behavior Verification

Approach

Software designs consist of components, roles, and, collaborations. Actors

that represent the components play roles collaboratively to implement collaborations.

Collaborations are descriptions of how a group of components jointly behave

when configured together in specific ways. A parameterized collaboration

represents a design construct that can be used repeatedly in different

component designs. The participants of the collaboration, including classifier

roles and association roles, form the parameters of the generic collaboration.

The parameters are bound to particular model elements in each instance

of the generic collaboration. Such a parameterized collaboration can capture

the structure of a design pattern. Each role is associated with a type

specification, called interaction policy. Interaction policies are language

independent specifications of external interface and visible synchronization

behavior that dictate the patterns of interchange of messages among collaborating

peers. A role describes a collaboration participant fulfilled by a specific

component when instantiated with a particular connector. Hence, a component

can play one or more roles. The context into which the component is embedded

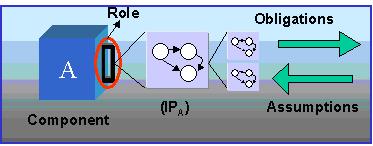

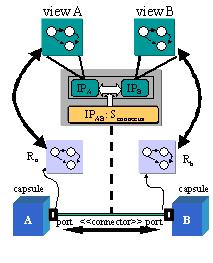

needs to be aware of the assumptions, as shown in Figure 1, regarding the

correct usage of the component. The component also publishes its obligations

indicating that as long as the context uses the component in conformance

with its assumptions, it will guarantee the interaction behavior denoted

by the obligations.

Figure 1: Assumptions and Obligations of a Role

This paper is organized as follows. In section 2 we identify and formulate

the problem. Sections 3 and 4 outline and summarize our approach to local

consistency, while section 5 is devoted to the global consistency

notion. In section 6, we overview the related work in collaboration design

and behavior verification. Finally, in section 7 we conclude and

discuss contributions of our work.

2. The Problem: The Local and Global Consistency Notions

of Collaborative Behavior for Parameterized Collaborations

In a parameterized collaboration design, one views software as a collection

of components that play specific roles in interacting, giving rise to collaborative

behavior. From this perspective, collaboration designs revolve around reusing

collaborations that typify certain design patterns. Each component in a

collaboration can have one or more roles. Unfortunately, verifying that

active, concurrently executing components obey the synchronization and

communication requirements needed for the collaboration to work is a serious

problem. At least two major complications arise in concurrent settings:

(1) it may not be possible to analytically identify components that violate

the synchronization constraints required by a collaboration, and (2) evolving

participants in a collaboration independently often gives rise to unanticipated

synchronization conflicts. These complications lead to the formulation

of the following questions that will be addressed in this dissertation:

-

Given generic parameterized collaborations and components with specific

roles, how do we verify that the provided and required synchronization

models are consistent and integrate correctly?

-

How do we guarantee that the synchronization behavior is maintained

and kept consistent with the collaboration constraints as the roles and

collaboration are refined during development?

-

How do we verify if the emergent cross-cutting synchronous causal behavior,

which arises due to the causal interference, interaction, and coordination

of components, is as intended? Furthermore, how do we determine whether

the coordination of roles played by each used component is evolved as expected

during the evolution of the collaboration as the system is maintained?

There are several questions that this research seeks to answer: Which representation

scheme and model should be used to represent synchronization contracts

(i.e., interaction policies) of a component at the abstract level? What

are the necessary and sufficient conditions and corresponding decision

procedures that facilitate tracking and maintaining semantic dependencies

between components based on the pertinent synchronization model? That is,

how do we verify that the roles integrate correctly, and how do we ensure

that the incremental enhancements to collaboration role models are consistent

with the original commitments made at previous levels of abstractions?

Furthermore, given that the used components fit into the roles expected

from them, is the actual emergent collaborative behavior, which arises

from the cascading of local interactions, achieved or evolved the way intended

by the designer?

2.1 Local Role Consistency in Collaboration-Based Software

Design

In the establishment of a market for software components, the separation

of specification and implementation is a well-known prerequisite.

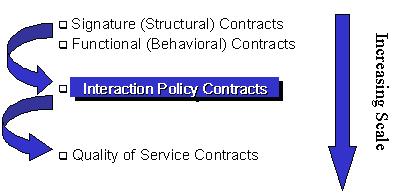

Figure 2: Reuse Contracts

Clients choosing the components based on their catalog descriptions

(i.e., contract specifications) expect them to behave correctly with respect

to their published contracts. In general, as shown in figure 2, contracts

can be divided into four main levels with increasingly negotiable properties

[Beugnard et al. 1999]. The first level, that of basic contracts,

is required to simply define the signature and types. The second level,

based on behavioral contracts, improves the level of client confidence,

particularly in a sequential context. The third level, emphasizing the

synchronization and interaction pattern contracts, improves confidence

particularly in concurrent and distributed settings. The fourth level,

quality of service contracts, quantifies the quality of service. Although

consistency notions exist for functional and structural contracts, still

missing with regard to collaboration-based software with collaborative

behavior reuse is a general consistency notion that emphasizes synchronization

contracts. Hence, determining methodologically whether the synchronization

constraints of used components’ roles fit into their local context (i.e.,

horizontal consistency) or are safely extended (i.e., vertical consistency)

is still an elusive goal.

2.2 Decision Procedures for the Analysis of Local Consistency

Conditions

Once functional and signature compatibility is established, synchronization

play a key role in assessing the fitness of components in a collaboration.

The preliminary analysis for synchronization fitness (consistency) of a

component is determined by its interaction policy compatibility with respect

to its context and depends on the interaction semantics under consideration.

Given the semantic domain used to express the synchronization consistency

conditions based on a certain interaction and request scheduling mechanism,

for correct realization, the next step involves developing decision procedures

to analyze the conditions mapped to the semantic domain. This involves

procedures determining (1) role compatibility (horizontal consistency),

(2) safe refinement (vertical consistency) of role and collaboration evolutions,

and (3) correct realization and implementation of the expected local role

interaction policies.

2.3 Causal Process Constraint Analysis for Achieving Global

Consistency

For achieving global consistency, analysis of local synchronization constraints

for horizontal and vertical consistency is necessary but insufficient.

This is due to the fact that the synchronization behavior of a role often

depends on other roles that, because of shared data state space and control

and data dependent causal connections, interfere with its interaction decisions.

Furthermore, for many component engineering tasks, including reasoning,

verification, and reuse, such causal connections depict why an artifact

is configured as it is. This is especially important when non-functional

aspects are derived from a particular design rationale based on certain

causal processes embedded in the collaboration. Hence, to achieve global

consistency, there is a need to provide methods for capturing, constraining,

and analyzing expected causal processes. Due to cascaded local synchronous

interactions, the emergent collaborative behavior designates how the component’s

behavior depends upon the composition of its constituent components and

how the component behavior is accomplished. Here, the conjecture asserts

that in order to verify that a component achieves its goal as intended

by the designer, one must show not only that the final expected condition

is satisfied but also that the components participated in the expected

causal process that resulted in the expected function. Hence, the causal

processes underlying the intended design patterns of the component designate

a certain aspect of the designer’s rationale and purpose to establish certain

non-functional as well as functional goals. In order to realize this rationale

and purpose it is essential to provide a means of (1) representing the

intended causal processes, (2) formulating constraints on causal processes

and their evolution, and (3) verifying whether the causal process constraints

are satisfied by the observed behavior of the component. In this

way, it would be possible to decide if the components (re)used in the intended

process work to achieve particular functional or nonfunctional goals.

3. Local Collaboration Consistency Notions using Concepts

of UML-RT

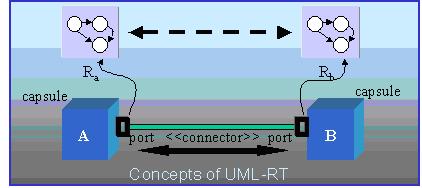

In this section the local consistency notion is illustrated in the context

of a collaborative behavior design. The utility of the methods is discussed

in the context of UML-Real Time (UML-RT), which is an extension of UML.

UML-RT places strong emphasis on UML collaboration concept, along with

notions of capsules, ports, connectors, protocols, and protocol roles.

Capsules are complex and potentially concurrent active architectural components.

They interact with their environment through one or more signal-based boundary

objects called ports. Collaboration diagrams describe the structural decomposition

of a capsule class.

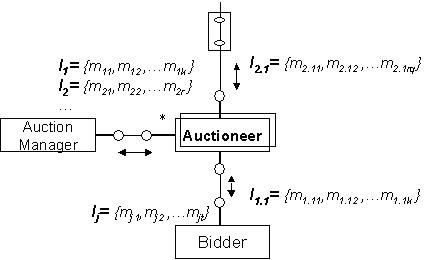

Figure 3: Components of UML-RT Role Models – The Local Perspective

Figure 3 illustrates the components of UML-RT role models. A port is

a physical part of the implementation of a capsule that mediates the interaction

of the capsule with the outside world—it is an object that implements a

specific interface. Ports realize protocols, which define the valid flow

of information (signals) between connected ports of capsules. A protocol

captures the contractual obligations that exist between capsules. Because

a protocol defines an abstract interface that is realized by a port, a

protocol is highly reusable. Connectors capture the key communication relationships

between capsules. Since they identify which capsules can affect each other

through direct communication, these relationships have architectural significance.

Collaboration diagrams, which capture architectural design patterns, are

used to describe the structural decomposition of a capsule class. They

use the primary modeling constructs of capsules, ports, and connectors

to specify the structure of software components. Capsules use ports to

export their interfaces to other capsules. The functionality of simple

capsules is realized directly by finite state machines, whose transitions

are triggered by the arrival of messages on the capsule's ports. Capsules

themselves can be decomposed into internal networks of communicating sub-capsules.

The state machine and network of hierarchically decomposed sub-capsules

allow the structural and behavioral modeling of arbitrarily complex systems.

|

|

Figure

4: Safe Refinement for Vertical Consistency

Figure 5: Horizontal Consistency

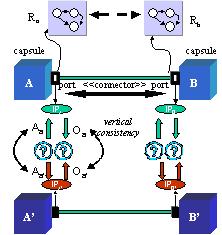

Given the formal models of assumptions and obligations, the horizontal

consistency refers to the compatibility of assumptions and obligations

of a port with respect to the obligations and assumptions of its peer capsule

port, as shown in Figure 4.

Figure 6: Connector Consistency

It is not always practical, however, to expect reused components

to be consistent with the context they are embedded. In such cases designer

develops a connector component that facilitates the mediation of interactions

between incompatible ports. Figure 6 denotes a connector projected onto

the viewpoints of the ports that it associates. Connector consistency entails

(1) the projection of the connector protocol onto its assumptions and obligations

with respect to each port and (2) horizontal consistency assessment between

the projected protocol and each connected port

Safe refinement, as shown in Figure 5, refers the consistency of the

original assumptions and obligations to the refined assumptions and obligations,

respectively, to assure that the refined component includes all the behavior

of the original role. The introduced consistency relation between the assumptions

and obligations need to be transitive and closed under the horizontal consistency

relation. Hence, as long as a component A' (abstract or concrete)

safely refines another component A, it can safely substitute A

without violating the originally commited assumptions and obligations.

4. Overview of Safe Refinement and Mutual Role Compatibility

of Interaction Policies

The certification model is based partly on the notion of augmenting an

abstraction refinement framework with concepts such as reusability and

interface compatibility. Formally representing the semantic dependencies

among abstract components and reasoning for their correct realization form

the basis of the certification model. Starting from the most abstract component,

the certification process for abstract components consists of two actions.

The first action determines the interaction policy compatibility of interaction

roles and the composition protocol consistency if a connector protocol

is declared, while the second ascertains whether the refined abstraction

is safe.

4.1 Safe Refinement of Role Interaction Policy Assumptions

The refinement of individual role interfaces is based simply on the implication

relation. Safe refinement of all roles of a particular component is essential

for safe refinement of a component. Conditions for the refinement are devised

such that the collaborating components would still be in agreement with

the refined interaction policies of the new role interface. We consider

two types of refinement, independent and simultaneous. Independent

refinement occurs when a single role interface is modified. Before mutual

compatibility of the refined role and its connected role interface can

be achieved, one must demonstrate that the refined role interface interaction

policies can at least engage in the expected interaction patterns, as dictated

by level of abstraction prior to refinement. Formally, the constraints

on the input patterns should be weaker, while the output constraints should

be stronger. Hence, the refined role interface would be able to accept

the expected input streams from its connection, as well as additional patterns

required by the refinement, while the outputs of the refined interface

should be acceptable by the input pattern assumptions of the connected

role interface. Furthermore, due to internal non-determinism of the finite

state abstraction of the protocol and interaction policies, those sets

of message traces or streams that fail to be accommodated by the refined

protocol must also shown to be failures in the original protocol. That

is, it is essential to incorporate failure semantics. The above argument

requires the refined interface to handle all the traces permissible in

the more abstract policy specification. Furthermore, all input/output stream

failures of the refined specification are also input/output failures of

the abstract specification. It is required that the output stream of the

refined policy to be equivalent to the output stream of the original interaction

policy under independent refinement. This condition is sufficient to ensure

that the output streams of the refined policy will be accepted by the receiving

role interface. In simultaneous refinement, the constraint on the set of

output streams of the refined policy is relaxed to incorporate additional

output streams. This relaxation must ensure, however, that the receiving

role interfaces and the composition protocol can consistently handle new

sets of output streams

4.3 Mutual Role Interaction Policy Compatibility

The role compatibility method uses semantic analysis to ensure the congruity

of connected role interface interaction policies. That is, interaction

policies of connected interfaces are checked to ensure that they are consistent

with each other. More specifically, collaborating components receive and

send messages in a synchronized manner; that is, when a collaboration mate

sends a message, the receiving party is shown to be in a state to receive

and act upon the message. To this end, each role interface declares the

set of input and output channels in terms of the provided (required) services

and the input (output) events. The services refer to bi-directional method

calls, whereas the events are unidirectional. The mutual role compatibility

condition formulation requires successful matching of (1) the provided

and required services and (2) the assumption and obligation constraints

of each role with respect to its peer. That is, the required services of

a particular role need to be a subset of the provided services of its mate.

Furthermore, the obligations of a role with respect to its mate should

be compatible with the assumptions of the mate component. More specifically,

the output stream constraints, called obligations, of a particular role

with respect to its mate need to match the trace constraints on or assumptions

about the input trace stream constraints of the mate component. Conversely,

the input stream trace constraints of the same role must comply with the

output stream obligation constraints of its mate. Note that the output

messages of a role interface are the input messages of its mate with opposite

directions.

4.4 Composition Protocol Consistency

Recent studies in component development and verification, as well as validation

processes, indicate that inconsistency is common throughout the development

and evolution stages. Inconsistencies occur because the reusable

components developed for different contexts have conflicting assumptions

with respect to each other. Basically, in our approach, protocol consistency

refers to the compatibility of the composition protocol with respect to

each of the role interaction policies. A composition protocol incorporates

all knowledge and rules regarding the means of coordinating and enacting

role interface interactions among the environment, component under certification,

and the associated peer components. In addition to identifying internal

rules used solely to regulate and adapt mismatched roles, it also stipulates

how and in which order the role enactment occurs. For each interaction

policy being tested by the mutual compatibility diagnosis, the composition

protocol consistency involves two stages. The first stage projects the

protocol onto the interaction policy services of the component and the

role interface services of the associated component or the environment.

The second stage consists of the mutual compatibility check, which

covers the projected interaction constraints imposed by the protocol and

the interaction policy constraints of the connected role. The composition

consistency rules indicate three requirements. First, the collaboration

mate must provide every service required by a role. Secondly, the output

channel obligation constraints of the projected role must be compatible

with the input assumptions constraints of the role interface under consideration.

Finally, the output channel obligation constraints of the role under consideration

must be compatible with the input assumption constraints of the projected

role interface derived from the original protocol.

5. How Components are Intended to Work and Evolve: Causal

Process Constraint Analysis

How components are configured and composed determines the organizational

behavior that will emerge from the conglomerates of local interactions.

Due to the state space explosion problem, deriving from the set of local

interactions a causal behavioral model depicting the computational paths

is very expensive. Hence, a new technique, which can be categorized as

hybrid simulation verification, is developed. By synthesizing an intermediate

analysis method, this technique combines the best of both worlds of run-time

testing and verification. It uses test scenario execution to drive the

component with a test engine that emulates the context of the component

under certification. Test scenario execution also limits the generation

of causal computation graphs to the computations consistent with the run-time

behavior. This limited computation graph, called the causal behavior model,

can be orders of magnitude smaller than the potential graph that could

be derived from analyzing the local interaction policies of roles.

5.1 Causal Process Constraint Specification Approach

Local interaction policies consider those roles with pair-wise local connections

that are associated with interaction constraints. They can be considered

as local integration dependencies between constituent components implementing

provided and required roles. In the context of the collaboration under

certification, the cascading of these local dependencies leads to the emergent

global integration behavior of the constituent components.

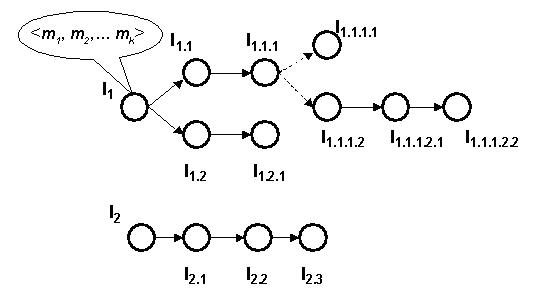

Figure 7: Causal Interaction Sequence Derivation from Collaboration

Graphs

Such global integration constraints are considered under the horizontal

integration aspect by utilizing mechanisms (i.e., UML collaboration graphs)

to specify the processes underlying the computations. This section introduces

a graph-oriented representation of causal event sequences based on the

UML collaboration graphs. A simple constraint language is provided to enable

the designer to constrain the expected causal process patterns and their

evolution. Figure 7 depicts the interaction aspect of a collaboration that

is utilized to capture causal processes that cross-cut the participant

components. Each interaction constitutes a sequence of messages exchanged

between a pair of connected roles. In figure 7, the overall interaction,

I=

{I1, I1.1, I2, I2.1 …Ij},

is decomposed into subinteractions such as I1, I1.1,

I2, I2.1 …Ij, each of which constitutes

message exchanges between peers. Note that UML collaboration interaction

semantics provides a precedence relation (i.e., partial order) among the

interactions. That is, the interaction identifiers that are distinguished

in one integer form (i.e., 1, 1.1, 1.1.1, 1.1.2, 1.1.3 and

1, 1.2, 1.2.1, 1.2.2, 1.2.3 are two such sequences) constitute a

sequence of interactions. The interaction sequences discussed above can

be viewed as directed acyclic graphs, with the nodes representing the events

and the arcs representing the causal relationships among them. This representation

makes it possible to distinguish between roles that interact and those

that do not.

Figure 8: Causal Interaction Sequences

The interaction sequencing rules of UML collaboration graphs are represented

in terms of And-Or graphs that are directed and acyclic, as shown

in Figure 8. These graphs are called causal interaction graphs. Each causal

interaction graph defines a single causal process. Each root-to-leaf sequence

of interactions is called a causal interaction sequence. Causal processes

are the expected computations that underlie services delivered by the collaboration.

The processes constrain the overall computation space by imposing a specific

causal pattern of interaction sequences. However, by themselves, causal

processes cannot relate their occurrence to the existence of other causal

processes and cannot represent the evolution of the collaborations. That

is, operators and further constraints are needed to determine whether the

occurrence of causal processes are temporally or logically related to each

other, as well as if the causal processes evolve as intended. Such

properties can be extended to check for interesting properties in the overall

causal event streams. Below, the syntactic grammar of temporal, logical,

and boolean operators on causal processes are presented:

Constraints ::= constraints ‘:’ CPDSet

CPDSet ::= CPD CPDSet | CPD

CPD ::= (always |sometimes)

Cpat | (introduce | prevent | guarantee) CPat

CPat ::= CedP | (until | unless|

since)

(CedP, CedP)

CedP ::= Ced or CedP | Ced

Ced ::= cedName and

Ced | cedName implies cedName | cedName

The constraints involve existential quantifiers (always, sometimes), evolution

operators (introduce, prevent, guarantee), temporal operators (until, unless,

since), and Boolean operators (or, and, implies). The semantics of the

operators are beyond the scope of this short paper and will be presented

elsewhere.

5.2 Overview of the Causal Process Constraint Analysis

Once a causal behavioral model is generated from the raw run-time interaction

logs of a collaboration, constraint analysis is performed to determine

if the run-time behavior satisfies causal process constraints. Due to space

limitations we are omitting the causal behavioral model derivation and

underlying causal event model here. In this section we briefly summarize

constraint analysis mechanism.

As mentioned above, the constraints against which the derived causal

behavioral model is checked impose temporal, evolution, and logical relationships

on the observed causal processes. The execution of each single test scenario

results in a set of observed causal process sequences. Constraint operators,

such as always and sometimes, help the designer decide if the certain causal

processes that are observed under all interaction scenarios enacted during

the run-time monitoring process are invariant. On the other hand, the evolution

operators involve comparisons of successive versions of the collaborative

behavior under the same test scenarios. The temporal operators, as well

as the boolean operators, impose a set of causal process patterns. The

results of the application of operator rules to satisfiability vectors

for each operand of the constraint tree are then combined to derive a global

result for quantifier and evolution constraints.

Once the behavioral models for each exercised interaction scenario are

derived and transformed into causal process sequences using the introduced

event causality model, one can view the complete observed behavior of the

component as a behavior matrix. The rows and columns of this matrix represent

the exercised scenarios and the causal process sequences derived from the

behavioral model with respect to the corresponding scenario. Formally,

the behavior matrix is a two-dimensional m x n matrix, called BM.

Here,

m stands for the number of scenarios exercised, and n

stands for the largest in cardinality of the set of causal process sequences

derived as a result of each scenario. Each cell of the matrix is denoted

as Pi,j, where i stands for the ith scenario

and j stands for the jth sequence in the set, Pi,

of causal process sequences associated with the scenario i. Hence,

Pi,j

stands for the jth sequence of the causal process sequence set

of the ith interaction scenario observed during run-time monitoring.

After defining a matrix-oriented representation of the behavioral model,

the next step is to test if the generated model satisfies constraints placed

upon it. To determine if such constraints are satisfied, the

approach requires a computational model to represent the constraints, as

well as methods and reasoning mechanisms regarding their satisfiability.

To this end, the constraint tree formalism is introduced.

Definition: A constraint tree, CT, is a finite set of

one or more nodes such that there is one designated node R called

the root of CT. The remaining nodes in CT –{R} are

also partitioned into n > 0 disjoint subsets CT1, CT2,

…, CTn, each of which is a tree, and whose roots R1,

R2, …, Rn, respectively, are children of R.

The leaf nodes that have out-degrees of zero are causal processes and the

internal nodes that have out-degrees of one or more are the operators of

the constraints.

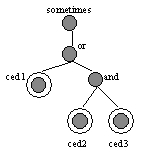

Figure 9: Constraint Tree

Constraint tree derivation: Consider the tree representation,

shown in Figure 9, of the constraint sometimes (ced1 or ced2 and ced3).

The constraint is parsed according to the constraint grammar to produce

an abstract syntax tree for the in-order representation. Then, the tree

is traversed to derive a pre-order representation of the constraint statement:

sometimes

or ced1 and ced2 ced3. Then the pre-order sequence is used to form

the constraint tree in a top-down manner.

Causal Process Analysis Summary: Causal process analysis constitutes

the methods that check for the satisfying of constraints represented in

constraint tree formalism against the behavior matrix BM = (Pi,

j) for i =1,2,…m and j = 1,2,…,k as discussed above.

The satisfying of a constraint for the recognized causal process sequences

of a scenario is represented as a boolean vector. Hence, a two dimensional

boolean matrix,

SAT = (bi,j) for i =1,2,…m

and j = 1,2,…,k is used for all interaction scenarios used in the

certification. Each boolean vector for a given interaction scenario constitutes

a row of the boolean matrix. We demonstrated the methods that check the

satisfying of constraints against the derived causal process sequences

of a particular scenario. The same method is applied for each scenario

to generate the complete boolean matrix. Let BMi denote the

ith row of the behavior matrix, which represents the set of causal

process sequences captured as a result of the ith interaction

scenario. Similarly, SATi denotes the boolean

vector that stores the computed boolean value denoting the satisfying of

each constraint by the sequences of the ith scenario. SATi

is computed gradually, using the constraint tree and the set of causal

process sequences associated with the BMi. Using the

constraint tree, the satisfiability vector is constructed from the bottom

up. Initially, each leaf node of the constraint tree is associated with

a boolean vector denoting the causal process sequences that satisfy the

causal event description depicted at that node. As the rules associated

with the operators at the internal nodes are applied to the vectors of

its children the derived vectors are propagated upward in the tree to generate

a new global boolean vector. The rules for each operator is derived using

its semantics. The resulting boolean vector at the root of the constraint

tree represents the satisfiability of the constraint with respect to the

behavioral model derived after the execution of a given test scenario.

6. Related Work

A variety of research groups have recently investigated the compositional

verification of collaboration designs. To prove certain behavioral properties

about individual and composition of collaborations, some of these groups—such

as Fisler and Krishnamurthi [2001]—employ finite state verification. Others,

such as Engels, Kuster, and Groenewegen [2001], use CSP-type process-oriented

formalisms to analyze object-oriented behavioral models for certain consistency

properties. The interface automata concept introduced by Alfaro and Henzinger

[2001] investigates light-weight formalisms that capture the temporal aspects

of software with an approach the authors call optimistic view. Additionally,

Butkevich, et al. [2000] describe an extension of the Java programming

language that supports static interaction conformance checking and dynamic

debugging of object protocols. Their approach considers only the incoming

requests and omits the output obligations of components, as well as global

causal processes. Also, the UML model integration testing approach

discussed by Hartman, Imoberdorf, and Meisinger [2001] illustrates the

issues involved in collaboration testing.

7. Conclusions

In this paper we briefly overviewed and summarized an approach to consistency

analysis and management for synchronization behavior in parameterized collaborations.

Local (that is, role-to-role) synchronization consistency conditions are

formalized and associated decidable inference mechanisms are developed

to determine mutual compatibility and safe refinement of synchronization

behavior. It has been argued that this form of local consistency is necessary,

but insufficient to guarantee a consistent collaboration overall.

As a result, a new notion of global consistency (that is, among multiple

components playing multiple roles) is briefly outlined: causal process

constraint analysis. Principally, the method allows one to: (1) represent

the intended causal processes in terms of interactions depicted in UML

collaboration graphs; (2) formulate constraints on such interactions and

their evolution; and (3) check that the causal process constraints are

satisfied by the observed behavior of the component(s) at run-time.

References

[Alfaro de L., T. A. Henzinger 2001].

“Interface Automata,” Software Engineering Notes, vol. 26, no.5, pp.109-129.

[Beugnard A., J.-M. Jezequel, N. Plouzeau, and D. Watkins 1999].

“Making Components Contract Aware,” IEEE Computer, vol. 32 no.7, pp. 38-45.

[Butkevich S., M. Raneco, G. Baumgartner, M. Young 2000],

“Compiler and Tool Support for Debugging Object Protocols,” Software

Engineering Notes, vol. 25 no. 6, pp.50-59.

[Dellarocas C. 1997].

“Software Component Interconnection Should Be Treated as a Distinct Design

Problem,” In Proceedings of the 8th Annual Workshop on

Software Reuse (WISR), Columbus, Ohio, March 23-26, 1997.

[Engels G., J. M. Kuster, L. Groenewegen. 2001].

“A Methodology for Specifying and Analyzing Consistency of Object-Oriented

Behavioral Models,” Software Engineering Notes, vol. 26,

no.5, pp.186-195

[Fisler K. and S. Krishnamurti 2001].

“Modular Verification of Collaboration-Based Software Design,” In Proceedings

of the 8th European Software Engineering Conference,

pp. 152-163.

[Lea D. 1999].

“Complex Components Create New Challenges,” In Proceedings of the 9th Annual

Workshop on Software Reuse.

[Weide W. B. and J. E. Hollingsworth 1992].

“On Local Certifiability of Software Components,” In Proceedings of the

5th Annual Workshop on Software Reuse.